Beyond the Hype: How to Find the Right AI Solution for Your Practice

As artificial intelligence transforms healthcare, medical practices face an overwhelming array of vendor promises and marketing claims. Here’s how to cut through the noise and make informed decisions.

The AI revolution has arrived in healthcare, and with it, a flood of vendors promising to transform your practice overnight. From clinical documentation to diagnostic support, AI tools are being marketed as the solution to every healthcare challenge.

But here’s the reality: not all AI tools are created equal, and many fail to deliver on their promises when implemented in real clinical settings. As someone who has spent years helping medical practices navigate technology decisions, I’ve seen practices invest in solutions that looked impressive in demos but created workflow disruptions and staff frustration in daily use.

The Problem with Vendor-Driven Evaluation

Most practices rely heavily on vendor materials and sales demonstrations when evaluating AI tools. This approach is fundamentally flawed. Vendors naturally present their products in the best possible light, often glossing over integration challenges, workflow disruptions, and real-world limitations.

Consider this: when evaluating a new medication, would you rely solely on pharmaceutical sales representatives? Of course not. You’d consult peer-reviewed studies, colleague experiences, and independent clinical data. Yet when it comes to AI tools that can significantly impact patient care and practice efficiency, many practices skip this critical independent evaluation step.

The Pillars of Successful Healthcare Software

After working with medical practices across specialties and evaluating dozens of AI tools, I’ve developed a systematic approach that focuses on three critical areas that determine success or failure:

Clinical Impact comes first. Before considering any AI tool, practices must clearly define the problem they’re trying to solve. Is it reducing documentation time? Improving diagnostic accuracy? Streamlining administrative tasks? Without a clear problem definition, practices often end up with sophisticated solutions to problems they don’t have.

Security and Compliance is a pass/fail evaluation. If a tool isn’t HIPAA compliant or can’t safely handle PHI, everything else is irrelevant. But don’t just accept vendor assurances: demand detailed compliance documentation and understand exactly how patient data will be handled, stored, and transmitted.

Integration Capabilities often determine whether a tool gets adopted or abandoned. The most sophisticated AI tool is worthless if it can’t integrate with your existing EHR or requires staff to learn entirely new workflows. Ask specific questions: Does this require API development? What’s the setup timeline? How will this fit into our current documentation process?

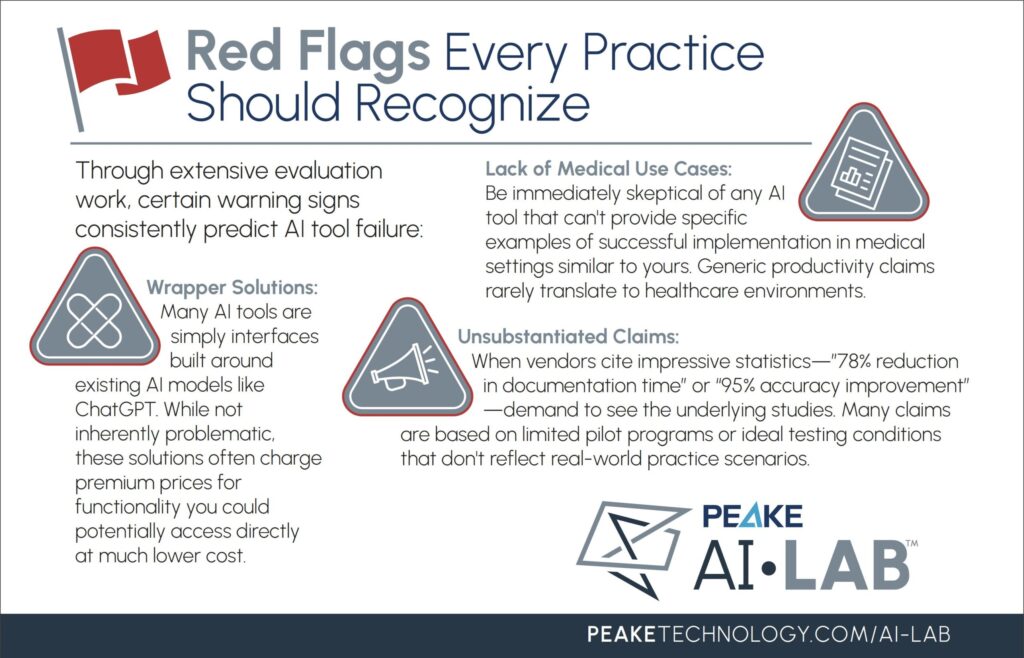
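One way to make the three areas concrete is a simple scorecard in which security and compliance acts as a gate and the other two areas are rated. The sketch below is illustrative only: the 1-to-5 rating scale, the equal weighting, and the names `ToolEvaluation` and `score_tool` are assumptions for this example, not part of any established framework.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolEvaluation:
    """One candidate AI tool, rated by the practice (hypothetical structure)."""
    name: str
    passes_security_review: bool  # pass/fail: HIPAA compliance and safe PHI handling
    clinical_impact: int          # assumed scale: 1 (low) to 5 (high)
    integration_fit: int          # assumed scale: 1 (low) to 5 (high)


def score_tool(tool: ToolEvaluation) -> Optional[float]:
    """Return None if the tool fails the security gate, else the mean rating."""
    if not tool.passes_security_review:
        return None  # everything else is irrelevant if this fails
    for rating in (tool.clinical_impact, tool.integration_fit):
        if not 1 <= rating <= 5:
            raise ValueError("ratings must be between 1 and 5")
    return (tool.clinical_impact + tool.integration_fit) / 2


if __name__ == "__main__":
    candidates = [
        ToolEvaluation("Tool A", True, 4, 3),
        ToolEvaluation("Tool B", False, 5, 5),
    ]
    for tool in candidates:
        result = score_tool(tool)
        print(tool.name, "rejected" if result is None else result)
```

Treating security as a gate rather than a weighted score means a tool with strong ratings elsewhere still cannot pass without it.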

Red Flags Every Practice Should Recognize

Through extensive evaluation work, certain warning signs consistently predict AI tool failure:

Lack of Medical Use Cases: Be immediately skeptical of any AI tool that can’t provide specific examples of successful implementation in medical settings similar to yours. Generic productivity claims rarely translate to healthcare environments.

Wrapper Solutions: Many AI tools are simply interfaces built around existing AI models like ChatGPT. While not inherently problematic, these solutions often charge premium prices for functionality you could potentially access directly at much lower cost.

Unsubstantiated Claims: When vendors cite impressive statistics, such as “78% reduction in documentation time” or “95% accuracy improvement,” demand to see the underlying studies. Many claims are based on limited pilot programs or ideal testing conditions that don’t reflect real-world practice scenarios.

The Questions That Matter

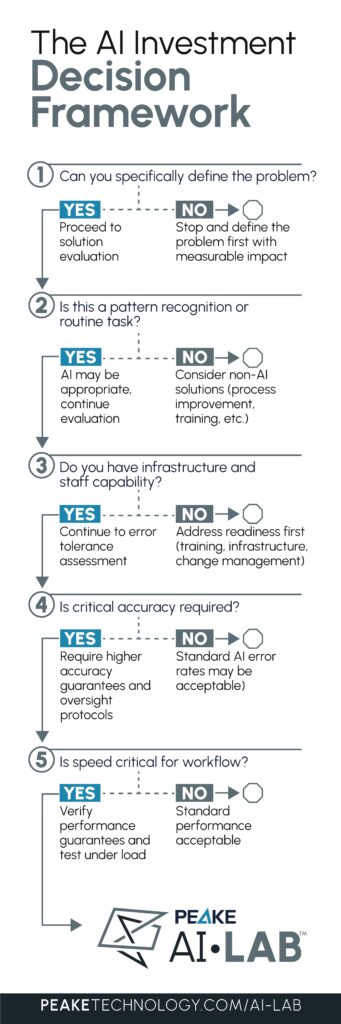

Before scheduling any demo, practices should ask themselves five fundamental questions:

- What specific problem are we trying to solve? Vague goals like “improve efficiency” lead to poor tool selection. Define the exact workflow challenge, quantify the current impact, and establish measurable success criteria.

- Is AI the right solution? AI excels at pattern recognition and routine tasks but struggles with complex decision-making and situations that require a personal touch. Ensure your problem aligns with AI’s strengths.

- What does our current workflow support? Consider your existing technology infrastructure, staff technical capabilities, and resistance to change. The best AI tool is useless if your team won’t adopt it.

- Can we tolerate AI errors? All AI systems have failure rates. For tasks where accuracy is critical, understand the error rate and have mitigation strategies in place.

- Do we need real-time processing? Many AI tools run in the cloud, so they depend on internet connectivity and can respond slowly. Ensure performance expectations align with your practice’s operational needs.
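The error-tolerance question above becomes easier to answer once an error rate is translated into a weekly count. The following is a minimal sketch of that arithmetic; the function name and the example figures (40 notes a day, 5 clinic days, a 2% error rate) are hypothetical and not drawn from any vendor data.

```python
def expected_errors_per_week(notes_per_day: int,
                             clinic_days_per_week: int,
                             error_rate: float) -> float:
    """Estimate how many AI-generated notes per week would contain an error.

    error_rate is a fraction between 0 and 1 (for example, 0.02 for 2%).
    """
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    if notes_per_day < 0 or clinic_days_per_week < 0:
        raise ValueError("volumes must be non-negative")
    return notes_per_day * clinic_days_per_week * error_rate


if __name__ == "__main__":
    # Hypothetical practice: 40 notes a day, 5 clinic days, 2% error rate.
    weekly = expected_errors_per_week(40, 5, 0.02)
    print(f"Expected notes needing correction per week: {weekly:.1f}")
```

A practice can then judge whether its review process can realistically catch that many errors each week.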

How to Demo an AI Product

When you do engage with vendors, structure demos to reveal real-world performance rather than idealized scenarios. Insist on seeing the tool handle your actual data types, with your typical patient volumes, using scenarios that match your daily workflows.

Ask the hard questions during demos: What happens if the AI generates inaccurate information? How do you handle edge cases? What’s the learning curve for staff? How quickly can we get support when issues arise? Also ask how the AI model was trained, what data sets were used, and how the system improves over time with use. The vendor’s responses to challenging questions often reveal more than their polished presentations.

Evaluating the Team Behind the Technology

The company behind the AI tool matters as much as the technology itself. Look for leadership teams with genuine healthcare experience—either physician-led organizations or companies with significant clinical advisory input. Teams that understand healthcare operations from the inside are more likely to build solutions that work in real clinical environments.

Support infrastructure is equally critical. What happens when the tool malfunctions during a busy patient day? Is phone support available, or are you limited to email tickets? Many practices discover after implementation that support is inadequate, leaving them struggling when problems arise during critical patient care moments.

The Path Forward

The AI transformation of healthcare is real and accelerating. Practices that thoughtfully adopt appropriate AI tools will gain significant competitive advantages in efficiency, accuracy, and patient satisfaction. But success requires moving beyond vendor marketing to rigorous, independent evaluation.

Start by clearly defining your practice’s specific challenges. Research multiple solutions. Demand demonstrations with real data. Talk to other practices using the tools you’re considering. Most importantly, pilot any solution before full implementation, with clear metrics for measuring success.
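As an illustration of "clear metrics," a pilot can compare documentation time measured before and during the trial against a target the practice sets in advance. This is a sketch under stated assumptions: the sample timings, the 25% target, and the function names are invented for the example, and a real pilot would need enough measurements to be meaningful.

```python
from statistics import mean


def percent_reduction(baseline_minutes, pilot_minutes):
    """Percentage drop in average minutes per note from baseline to pilot."""
    baseline_avg = mean(baseline_minutes)
    pilot_avg = mean(pilot_minutes)
    if baseline_avg <= 0:
        raise ValueError("baseline average must be positive")
    return (baseline_avg - pilot_avg) / baseline_avg * 100


def pilot_met_target(baseline_minutes, pilot_minutes, target_percent):
    """True if the measured reduction reaches the target set before the pilot."""
    return percent_reduction(baseline_minutes, pilot_minutes) >= target_percent


if __name__ == "__main__":
    # Hypothetical minutes per note, measured by the practice itself.
    baseline = [12, 15, 11, 14]
    pilot = [9, 10, 8, 9]
    print(f"Reduction: {percent_reduction(baseline, pilot):.1f}%")
    print("Target met:", pilot_met_target(baseline, pilot, target_percent=25))
```

Measuring the result in-house also gives the practice its own number to set against a vendor's headline claim.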

The difference between transformative AI adoption and costly disappointment comes down to one thing: the quality of your evaluation process. The practices that commit to rigorous, independent assessment of AI tools will build genuine competitive advantages while others struggle with solutions that promise much and deliver little. Your patients deserve better than vendor-driven decisions. Your practice deserves tools that actually work.